Image Quality Assessment (IQA) methods are developed to measure the perceptual quality of images. One of the most important applications of IQA is measuring the performance of image restoration algorithms. However, while new algorithms have continuously improved image restoration performance, we notice an increasing inconsistency between quantitative results and perceptual quality. In particular, the invention of Generative Adversarial Networks (GANs) and GAN-based image restoration algorithms poses a great challenge for IQA, as they bring completely new characteristics to the output images. This also affects the development of the image restoration field, as comparing algorithms with flawed IQA methods may not lead to better perceptual quality. In this paper, we contribute a new large-scale IQA dataset and build benchmarks for IQA methods.

NTIRE Perceptual Image Quality Assessment Challenge

Jointly with the NTIRE 2021 workshop, we hold an NTIRE challenge on perceptual image quality assessment, that is, the task of predicting the perceptual quality of an image based on a set of prior examples of images and their perceptual quality labels. The challenge uses the proposed PIPAL dataset and its extended test set and has a single track. The aim is to obtain a network design or solution capable of producing high-quality results with the best correlation to the reference ground truth.
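As a rough illustration of what "correlation to the reference ground truth" can mean in practice, the sketch below compares predicted quality scores against subjective scores. It assumes Pearson linear correlation (PLCC) and Spearman rank correlation (SRCC), two measures commonly used in IQA; this page does not state which correlation measure the challenge uses. The function names and the sample scores are hypothetical and are not taken from PIPAL.

```python
import math


def pearson(x, y):
    """Pearson linear correlation between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical scores for five images (illustrative only, not PIPAL data).
subjective = [1.0, 2.0, 3.0, 4.0, 5.0]
predicted = [0.1, 0.4, 0.9, 1.6, 2.5]

plcc = pearson(predicted, subjective)
srcc = spearman(predicted, subjective)
print(f"PLCC = {plcc:.4f}, SRCC = {srcc:.4f}")
```

In this toy example the predictions are monotonic in the subjective scores but not linear, so the rank correlation is perfect while the linear correlation is slightly lower. That difference is why the two measures are often reported together.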

Part of the original PIPAL dataset will be released as the training data, and an extension dataset with new GAN-based distortions will be collected as the test set. For more information:

- New Trends in Image Restoration and Enhancement workshop (NTIRE) 2021

- NTIRE 2021, Perceptual Image Quality Assessment Challenge

Please register for the competition to download the dataset.

Motivation

The most recent image restoration (IR) algorithms based on GANs have achieved significant improvements in visual performance, but they also present great challenges for quantitative evaluation. Notably, we observe an increasing inconsistency between perceptual quality and the evaluation results. This naturally raises two questions:

- Can existing IQA methods objectively evaluate recent IR algorithms?

- When we focus on beating current benchmarks, are we getting better IR algorithms?

Benchmark

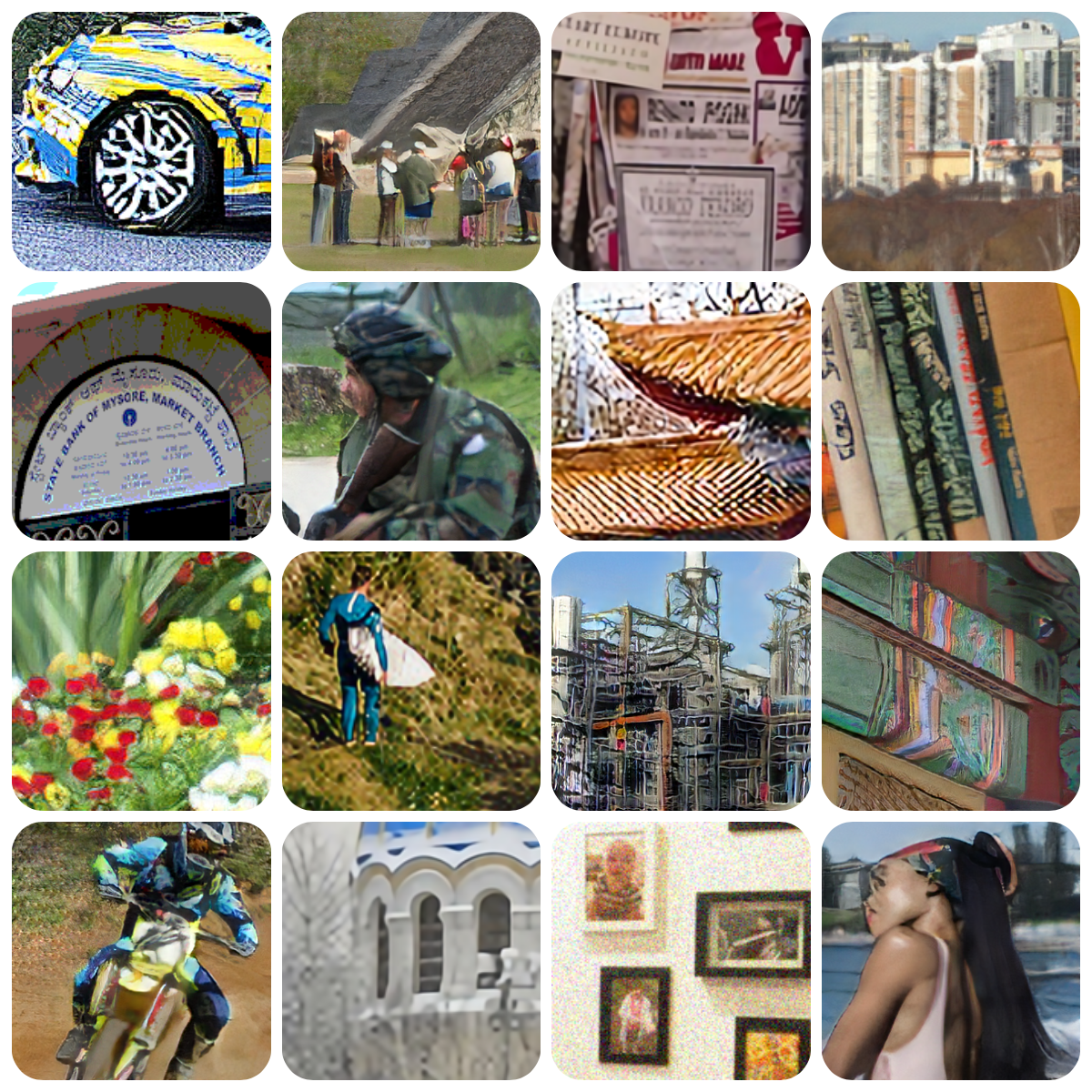

To understand the new challenges brought by these perceptual-driven algorithms, we construct a new IQA dataset that includes the results of these new algorithms. We term this dataset Perceptual Image Processing ALgorithms, PIPAL. PIPAL contains:

- 250 high-quality reference images,

- 40 distortion types, including the output of GAN-based algorithms,

- 29,000 distorted images,

- 1,130,000 human ratings.
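To give a sense of scale, the counts above can be turned into simple averages. This is only a back-of-the-envelope sketch: it assumes the listed figures are rounded totals and that each rating is attributed to a single distorted image, which this page does not specify. The variable names are illustrative.

```python
# Totals as listed above (assumed to be rounded figures).
reference_images = 250
distorted_images = 29_000
human_ratings = 1_130_000

# Average number of distorted versions derived from each reference image.
distorted_per_reference = distorted_images / reference_images

# Average number of ratings per distorted image, under the assumption
# that every rating counts toward exactly one distorted image.
ratings_per_distorted = human_ratings / distorted_images

print(f"distorted images per reference: {distorted_per_reference:.0f}")
print(f"ratings per distorted image: {ratings_per_distorted:.1f}")
```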

Citation

@InProceedings{pipal,

author = {Jinjin Gu and Haoming Cai and Haoyu Chen and Xiaoxing Ye and Jimmy Ren and Chao Dong},

title = {PIPAL: a Large-Scale Image Quality Assessment Dataset for Perceptual Image Restoration},

booktitle = {European Conference on Computer Vision (ECCV) 2020},

year = {2020},

publisher = {Springer International Publishing},

pages = {633--651},

address = {Cham},

}

@inproceedings{gu2021ntire,

title={NTIRE 2021 challenge on perceptual image quality assessment},

author={Gu, Jinjin and Cai, Haoming and Dong, Chao and Ren, Jimmy S and Qiao, Yu and Gu, Shuhang and Timofte, Radu},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={677--690},

year={2021}

}

@article{gu2020image,

title={Image Quality Assessment for Perceptual Image Restoration: A New Dataset, Benchmark and Metric},

author={Gu, Jinjin and Cai, Haoming and Chen, Haoyu and Ye, Xiaoxing and Ren, Jimmy and Dong, Chao},

journal={arXiv preprint arXiv:2011.15002},

year={2020}

}

Acknowledgement: This work is partially supported by SenseTime Group Limited, the National Natural Science Foundation of China (61906184), Science and Technology Service Network Initiative of Chinese Academy of Sciences (KFJ-STS-QYZX-092), Shenzhen Basic Research Program (JSGG20180507182100698, CXB201104220032A), the Joint Lab of CAS-HK, Shenzhen Institute of Artificial Intelligence and Robotics for Society.
